Spark Ecosystem Components

Spark Core: It is the foundation of every Spark application, and the other components depend directly on it. It provides a platform for a wide variety of functions such as scheduling, distributed task dispatching, in-memory processing and data referencing.

Spark Streaming: It is the component that works on live streaming data to provide real-time analytics. The live data is ingested into discrete units called batches, which are then executed on Spark Core.
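The micro-batch idea behind Spark Streaming can be illustrated with a minimal plain-Python sketch. This is not Spark's streaming API: the functions `micro_batches` and `process_batch` are hypothetical names for illustration, and batches are cut by record count rather than by time interval only to keep the example deterministic.

```python
from collections import Counter
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(stream: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Group an incoming stream of records into small, discrete batches.

    Assumption: a fixed record count is used here; Spark Streaming itself
    groups live data by time interval.
    """
    it = iter(stream)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch


def process_batch(batch: List[str]) -> Counter:
    """Per-batch job (a word count), standing in for work run on Spark Core."""
    counts = Counter()
    for line in batch:
        counts.update(line.split())
    return counts


if __name__ == "__main__":
    live_data = ["spark core", "spark streaming", "batch one", "spark"]
    for i, batch in enumerate(micro_batches(live_data, batch_size=2)):
        print(i, dict(process_batch(batch)))
```

Each batch is a small, finite job, which is why the streaming layer can reuse the batch engine underneath rather than needing a separate execution model.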

Spark is a general-purpose cluster computing system that provides high-level APIs in Scala, Python, Java, and R. It was developed to overcome the limitations of Hadoop's MapReduce paradigm. Spark is often cited as running some workloads up to 100 times faster than MapReduce, because it can cache data in memory, whereas MapReduce reads from and writes to disk between steps. This in-memory processing is the main source of its speed.

Spark can process data from diverse sources such as the Hadoop Distributed File System (HDFS), Amazon S3, Apache Cassandra, MongoDB, Alluxio and Apache Hive. It can run on Hadoop YARN (Yet Another Resource Negotiator), Apache Mesos, EC2 or Kubernetes, or in standalone cluster mode. It uses RDDs (Resilient Distributed Datasets) to distribute workloads across individual nodes, which makes it well suited to iterative applications. RDDs also make programming easier than with Hadoop MapReduce.
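Why caching matters for iterative work can be shown with a small, single-process sketch. `ToyDataset`, `map_reduce` and `source_reads` are hypothetical names, not Spark's API; in real PySpark this role is played by RDD methods such as `map`, `reduce` and `cache`. The sketch splits data into partitions (as Spark spreads an RDD across nodes) and counts how often the "disk" source is read with and without caching.

```python
from functools import reduce
from typing import Callable, Iterable, List, Optional


class ToyDataset:
    """Hypothetical single-process stand-in for an RDD (not Spark's API)."""

    def __init__(self, source: Callable[[], Iterable[int]], num_partitions: int = 4):
        self._source = source                  # stands in for reading from disk
        self._num_partitions = num_partitions
        self._cached: Optional[List[List[int]]] = None
        self.source_reads = 0                  # how many times the source was read

    def _partitions(self) -> List[List[int]]:
        if self._cached is not None:
            return self._cached                # served from memory
        self.source_reads += 1
        data = list(self._source())
        n = self._num_partitions
        return [data[i::n] for i in range(n)]  # round-robin split into partitions

    def cache(self) -> "ToyDataset":
        # Unlike Spark, where cache() is lazy, this toy materializes eagerly.
        self._cached = self._partitions()
        return self

    def map_reduce(self, map_fn: Callable[[int], int],
                   reduce_fn: Callable[[int, int], int]) -> int:
        # Reduce each non-empty partition on its own (one "node's" share of
        # the work), then combine the partial results.
        partials = [reduce(reduce_fn, map(map_fn, part))
                    for part in self._partitions() if part]
        return reduce(reduce_fn, partials)


if __name__ == "__main__":
    def read_numbers() -> Iterable[int]:
        return range(1, 101)

    def square(x: int) -> int:
        return x * x

    def add(a: int, b: int) -> int:
        return a + b

    uncached = ToyDataset(read_numbers)
    for _ in range(5):  # an iterative job: five passes over the same data
        uncached.map_reduce(square, add)
    print("uncached reads:", uncached.source_reads)

    cached = ToyDataset(read_numbers).cache()
    for _ in range(5):
        cached.map_reduce(square, add)
    print("cached reads:", cached.source_reads)
```

Without caching, every pass goes back to the source; with caching, the source is read once and later passes reuse the in-memory partitions, which is the effect the comparison with disk-based MapReduce relies on.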

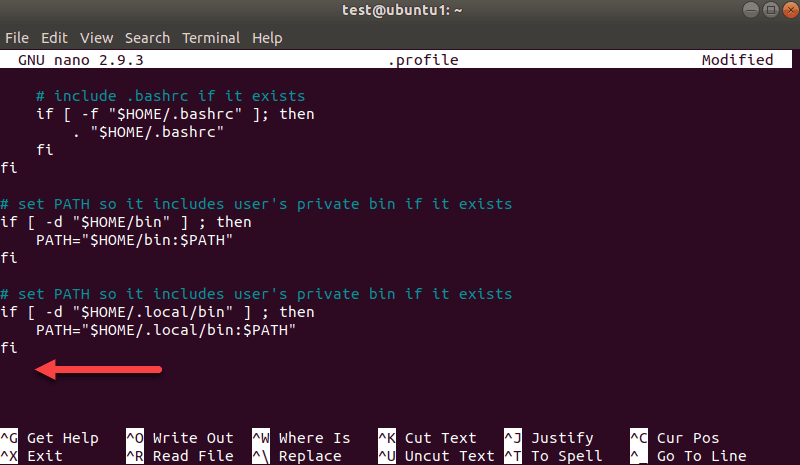

INSTALL PYSPARK ON UBUNTU COMMAND LINE SOFTWARE

Spark is an open-source framework for running analytics applications. It is a data processing engine, hosted at the vendor-independent Apache Software Foundation, designed to work on large data sets, or big data.
